Using a standard RGB camera and AI to obtain vegetation data

URBANA, Ill. – Aerial imagery is a valuable component of precision agriculture, providing farmers with important information about crop health and yield. Images are typically obtained with an expensive multispectral camera attached to a drone. But a new study from the University of Illinois and Mississippi State University (MSU) shows that pictures from a standard red-green-blue (RGB) camera combined with AI deep learning can provide equivalent crop prediction tools for a fraction of the cost.

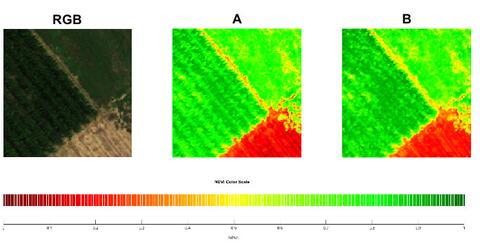

Multispectral cameras provide color maps that represent vegetation to help farmers monitor plant health and spot problem areas. Vegetation indices such as the Normalized Difference Vegetation Index (NDVI) and Normalized Difference Red Edge Index (NDRE) display healthy areas as green, while problem areas show up as red.
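
For readers who want to see how these indices come out of the camera bands, here is a minimal Python sketch. NDVI is the standard normalized difference (NIR - Red) / (NIR + Red), and NDRE uses the red-edge band in place of red. The reflectance values, the function names, and the simple red-to-green color ramp are illustrative assumptions, not the study's data or the color scheme of any particular camera software.

```python
import numpy as np

def normalized_difference(nir, other):
    """Return (nir - other) / (nir + other), with 0 where the sum is 0.

    Pass the red band for NDVI or the red-edge band for NDRE.
    """
    nir = np.asarray(nir, dtype=np.float64)
    other = np.asarray(other, dtype=np.float64)
    denom = nir + other
    out = np.zeros_like(denom)
    np.divide(nir - other, denom, out=out, where=denom != 0)
    return out

def to_red_green(index):
    """Map index values in [-1, 1] to RGB (assumed ramp: -1 red, +1 green)."""
    t = (np.clip(index, -1.0, 1.0) + 1.0) / 2.0
    rgb = np.stack([1.0 - t, t, np.zeros_like(t)], axis=-1)
    return (rgb * 255).round().astype(np.uint8)

# Hypothetical 2x2 reflectance patches (made-up values for illustration).
nir = np.array([[0.60, 0.30], [0.50, 0.20]])
red = np.array([[0.10, 0.30], [0.05, 0.20]])

ndvi = normalized_difference(nir, red)
colors = to_red_green(ndvi)
```

Pixels where near-infrared reflectance far exceeds red reflectance score close to 1 and render toward green, while pixels where the two are similar score near 0.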

“Typically, to do this you would need to have a near-infrared (NIR) camera that costs about $5,000. But we have shown that we can train AI to generate NDVI-like images using an RGB camera attached to a low-cost drone, and that reduces the cost significantly,” says Girish Chowdhary, associate professor in the Department of Agricultural and Biological Engineering at U of I and co-author on the paper.

For this study, the research team collected aerial images from corn, soybean, and cotton fields at various growth stages with both a multispectral and an RGB camera. They used Pix2Pix, a neural network designed for image conversion, to translate the RGB images into NDVI and NDRE color maps with red and green areas. After first training the network with a large number of both multispectral and regular pictures, they tested its ability to generate NDVI/NDRE pictures from another set of regular images.
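
As a rough illustration of what training Pix2Pix involves, the sketch below computes the generator objective described in the original Pix2Pix paper: an adversarial term that rewards fooling a discriminator, plus a weighted L1 term that keeps the generated map close to the paired real one. The function name is hypothetical, and the weight of 100 is the default from the original Pix2Pix paper; the settings used in this study are not stated here.

```python
import numpy as np

def generator_loss(disc_prob_fake, generated, target, l1_weight=100.0):
    """Pix2Pix-style generator loss: adversarial term + l1_weight * L1 term.

    disc_prob_fake: discriminator's probability that each generated
                    patch is real (values in 0..1).
    generated:      index map produced from the RGB image.
    target:         paired index map from the multispectral camera.
    l1_weight:      assumed default from the original Pix2Pix paper.
    """
    p = np.clip(np.asarray(disc_prob_fake, dtype=np.float64), 1e-7, 1 - 1e-7)
    adversarial = -np.mean(np.log(p))  # low when the discriminator is fooled
    gen = np.asarray(generated, dtype=np.float64)
    tgt = np.asarray(target, dtype=np.float64)
    l1 = np.mean(np.abs(gen - tgt))    # low when the maps match pixel by pixel
    return adversarial + l1_weight * l1

# Made-up example: a poor generator output versus a perfect one.
target = np.ones((4, 4))
poor = generator_loss(np.full((2, 2), 0.5), np.zeros((4, 4)), target)
perfect = generator_loss(np.ones((2, 2)), target, target)
```

The L1 term is what ties each generated map to its paired multispectral image, which is why the network needs both kinds of pictures of the same areas during training.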

“There is a reflective greenness index in the photos that indicates photosynthetic efficiency. It reflects a little bit in the green channel, and a lot in the near-infrared channel. But we have created a network that can extract it from the green channel by training it on the NIR channel. This means we only need the green channel, along with other contextual information such as red, blue and green pixels,” Chowdhary explains.

To test the accuracy of the AI-generated images, the researchers asked a panel of crop specialists to view side-by-side images of the same areas, either generated by AI or taken with a multispectral camera. The specialists indicated whether they could tell which one was the true multispectral image, and whether they noticed any differences that would affect their decision-making.

The experts found no observable differences between the two sets of images, and they indicated they would make similar predictions from both. The research team also compared the images statistically, confirming there were virtually no measurable differences between them.
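
The statistical procedures are not named here, so the following is only a sketch of the kind of pixel-level comparison one could run between a generated index map and its multispectral counterpart. The choice of metrics (mean absolute error, root-mean-square error, and Pearson correlation), the function name, and the sample values are all assumptions for illustration.

```python
import numpy as np

def agreement_metrics(reference, generated):
    """Compare two index maps of the same area, pixel by pixel."""
    ref = np.asarray(reference, dtype=np.float64).ravel()
    gen = np.asarray(generated, dtype=np.float64).ravel()
    diff = gen - ref
    return {
        "mae": float(np.mean(np.abs(diff))),           # average absolute gap
        "rmse": float(np.sqrt(np.mean(diff ** 2))),    # penalizes large gaps
        "pearson_r": float(np.corrcoef(ref, gen)[0, 1]),  # linear agreement
    }

# Made-up NDVI values: the generated map is off by 0.02 at every pixel.
reference = np.array([[0.70, 0.10], [0.55, 0.30]])
generated = reference + np.array([[0.02, -0.02], [0.02, -0.02]])

metrics = agreement_metrics(reference, generated)
```

Small error values together with a correlation close to 1 would indicate that the two maps carry nearly the same information for that field.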

Joby Czarnecki, associate research professor at MSU and co-author on the paper, cautions that this doesn’t mean the two sets of images are identical.

“While we can’t say the images would provide the same information under all conditions, for this particular issue, they allow for similar decisions. Near-infrared reflectance can be very critical for some plant decisions. However, in this particular case, it’s exciting that our study shows you can replace an expensive technology with inexpensive artificial intelligence and still arrive at the same decision,” she explains.

The aerial view can provide information that is difficult to obtain from the ground. For example, areas of storm damage or nutrient deficiencies may not be easily visible at eye level, but can be spotted easily from the air. Farmers with the appropriate authorizations may choose to fly their own drones, or they may contract a private company to do so. Either way, the color maps provide important crop health information needed for management decisions.

The AI software and procedures used in the study are available to companies that want to implement them or expand their use by training the network on additional datasets.

“There's a lot of potential in AI to help reduce costs, which is a key driver for many applications in agriculture. If you can make a $600 drone more useful, then everybody can access it. And the information would help farmers improve yield and be better stewards of their land,” Chowdhary concludes.

The Department of Agricultural and Biological Engineering is in the College of Agricultural, Consumer and Environmental Sciences and The Grainger College of Engineering at the University of Illinois.

The paper, “NDVI/NDRE prediction from standard RGB aerial imagery using deep learning,” is published in Computers and Electronics in Agriculture [https://doi.org/10.1016/j.compag.2022.107396]. Authors include Corey Davidson, Vishnu Jaganathan, Arun Narenthiran Sivakumar, Joby Czarnecki and Girish Chowdhary.

This work was supported by the USDA National Institute of Food and Agriculture.